PAC’s Predictions for 2024

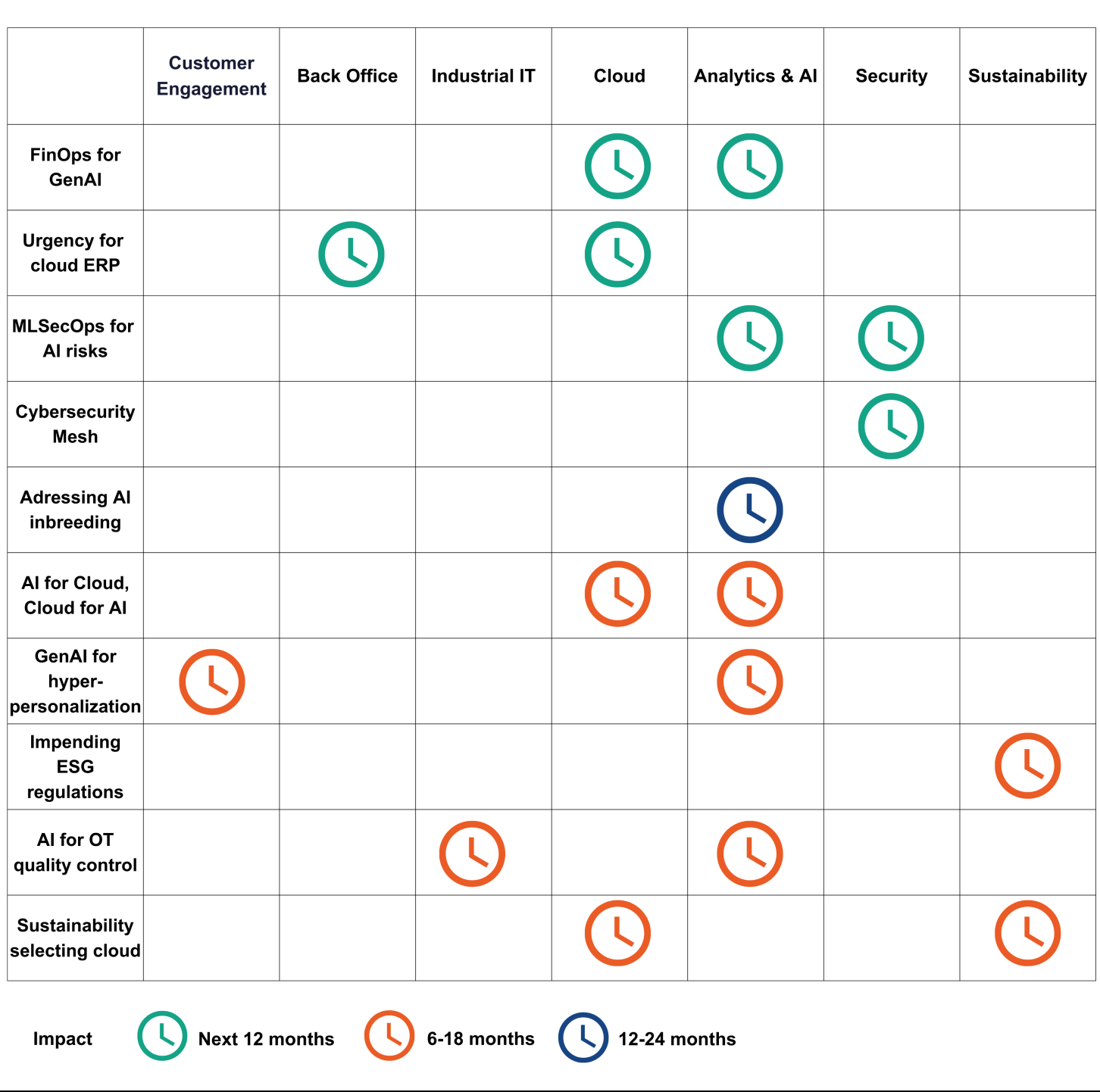

Towards the end of each year, PAC analysts publish their “top predictions” for the following year(s). They highlight trends that are expected to impact the IT market in various ways – through above-average growth, huge business volumes, or shifts in demand or supply.

As expected, AI plays a dominant role in these predictions this year.

The critical role of FinOps to scale cloud-based AI capabilities

As organizations have been adopting cloud solutions and services, they are gradually transitioning to hybrid and multi-cloud operating models. However, despite all the opportunities the cloud provides, many organizations are challenged by spiraling operational costs.

Over the past two years, PAC has seen a significant increase in awareness and adoption of FinOps as a means to manage the sprawl of the cloud cost-effectively, and now with the advent of generative AI (GenAI), PAC considers organizations to be at another FinOps inflection point.

2023 has been a tipping point for AI because of the amount of focus generative AI (GenAI) has had since the release of ChatGPT around a year ago, which raised broad public awareness of the exponentially growing capabilities of AI. PAC considers GenAI to be what the tech industry calls a “killer application” in that it is an innovation for which the use case impacts both the personal and professional lives of people at scale across the globe.

This has understandably led to an “arms race” in 2023 across the tech industry, with software companies reacting by focusing their messaging on GenAI and promoting how they are building it into their solutions and services.

From PAC’s perspective, this has broadly led to a tech industry culture in 2023 of “The answer is GenAI; now what is the question?”. However, the nature of large language model (LLM) based AI like GenAI requires high levels of computing and data processing that are only affordable at scale delivered through a cloud service.

Currently, the main cost models for GenAI are based on either tokens or characters, with another pricing dimension based on the foundational AI model used, i.e., its sophistication for a specific task, as with ChatGPT 4. A further GenAI cloud pricing nuance is based on the input and the output provided, where the costs can vary depending on the type and amount of content generated.
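To make these pricing dimensions concrete, the following is a minimal sketch of a token-based cost estimate. The model names and per-1,000-token prices are purely hypothetical placeholders and do not reflect any vendor's actual rates; the point is only to show how the model tier and the input/output split combine into a bill.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Hypothetical price per 1,000 tokens, in USD."""
    input_per_1k: float
    output_per_1k: float


# Illustrative placeholder prices only (assumption, not real vendor rates).
PRICES = {
    "basic-model": ModelPrice(input_per_1k=0.001, output_per_1k=0.002),
    "advanced-model": ModelPrice(input_per_1k=0.03, output_per_1k=0.06),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request: input and output tokens are priced separately."""
    price = PRICES[model]
    return (
        (input_tokens / 1000) * price.input_per_1k
        + (output_tokens / 1000) * price.output_per_1k
    )


def monthly_cost(
    model: str,
    requests_per_day: int,
    avg_input_tokens: int,
    avg_output_tokens: int,
    days: int = 30,
) -> float:
    """Rough monthly forecast from average request size and daily volume."""
    per_request = request_cost(model, avg_input_tokens, avg_output_tokens)
    return requests_per_day * days * per_request


if __name__ == "__main__":
    for name in PRICES:
        estimate = monthly_cost(name, 10_000, 1_000, 500)
        print(f"{name}: about ${estimate:,.2f} per month")
```

Running the same workload against both hypothetical tiers shows how quickly the choice of model alone can change the monthly figure, which is the kind of forecast a FinOps practice would want to review before usage scales.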

Given the relatively nascent nature of GenAI cloud services, PAC sees a significant risk that demand for such services will drive unexpected cost increases at a magnitude and pace far worse than organizations experienced during the initial wave of cloud sprawl.

Based on PAC’s experience with cloud sprawl, organizations could see spikes in AI costs that are not thoroughly managed across their business functions. If AI cloud service usage increases unexpectedly and is not financially managed centrally, costs could spiral out of control.

PAC predicts this is where FinOps becomes critical for CxOs to adopt or adapt a culture of financial accountability and transparency, optimize cloud service expenditures, effectively forecast demand and ensure subscriptions are costed accordingly, and reduce operating costs relating to GenAI.

Migration to state-of-the-art ERP in the cloud

Cloud deployment models have already been widely adopted in many areas of business applications. For example, public cloud deployment models have been established for years in customer relationship management (CRM), human capital management (HCM), and content management. Customers, including large corporations, have long overcome their reservations about public cloud deployment models.

This has not been the case for critical business processes such as vertical production and ERP. However, for some time now, we have observed an increase in migrations to cloud-based deployment models. This applies to SAP environments as well as Oracle applications and some vertical applications such as Guidewire in the insurance sector and Avaloq and Temenos in the banking sector.

This trend is driven by two market developments, one on the user side and the other on the supplier side. On the user side, the requirements of digital transformation and the shortage of skilled workers are forcing companies to modernize their application landscapes to introduce innovation faster, automate processes, and adapt business models more quickly.

Against this background, customers are increasingly asking for cloud-based deployment models, which relieve them of application operation and investment in application modernization. In addition, companies want to make better use of the benefits of cloud-based applications for their business models, such as increased agility, flexibility, IT security, and access to innovations such as process automation, GenAI, and machine learning.

On the supplier side, software providers are paving the way for better cloud utilization: they have optimized their applications for cloud operation, and they are fueling cloud demand with contract and sales incentives. Microsoft, for example, made the architecture of its Dynamics applications cloud-native several years ago. Oracle, too, has completely overhauled its ERP applications and optimized them for operation in the Oracle Cloud Infrastructure public cloud.

This change can be observed most clearly in the SAP market. SAP prefers public cloud deployment models for the further development and integration of innovation in its various SAP S/4HANA editions. The RISE and GROW programs also promote deployment models based on a public SaaS edition or a private cloud in a hosted public IaaS infrastructure. All in all, we observe increased openness to public cloud deployment models in the SAP market.

The stock market rewards cloud-driven business models as they deliver recurring revenue that provides a constant, annually growing base. Microsoft, in particular, leaves its customers little choice but to opt for a subscription-based model. User companies seem to have accepted this; they are warming to the advantages of the cloud model, such as regular functional updates, data center operation by the provider, and the security measures implemented by the cloud operators – an aspect that should not be underestimated.

The rise of MLSecOps to anticipate the vulnerabilities of multi-hop AI

2023 has been the year where mainstream awareness of AI, particularly generative AI (GenAI), has exponentially increased for people in their personal and professional lives. PAC is already witnessing organizations being challenged regarding how they use AI at scale as a commodity technology across a plethora of IT solutions and services in support of business operating models.

As the use of machine learning (ML), and now deep learning (DL), has grown in organizations over recent years, it has led to a need for a better workflow pipeline between ML teams and IT operations, akin to the adoption of DevOps. The data focus of AI has also exposed the importance of embedding security practices in ML-related workflows. This has led to the growing adoption of MLSecOps practices within organizations to ensure AI usage is as well managed as the coding practices that DevOps supports.

As is often the case with disruptive and innovative technology like GenAI, experimentation and adoption are accelerating, and the technology can quickly come to be considered a critical, or at least essential, core business tool. In PAC’s experience, this type of rapid technology consideration and adoption often outpaces the assessment of its impact on an organization’s cybersecurity operating model. All too commonly, this leads to a catch-up period and increased risk exposure for organizations that have not adapted their cybersecurity strategy in parallel with their AI strategy.

A common pattern PAC is witnessing currently when speaking with CxOs is the siloed use of AI for specific business service and operational tasks. However, PAC is concerned that leaders in organizations are not anticipating a highly complex issue that will grow exponentially in the coming years. As organizations adjust their processes to leverage a wide range of AI services, the occurrence of multi-hop AI will grow rapidly.

PAC defines multi-hop AI as the chaining together of multiple AI solutions or services and their data sets into an integrated pipeline or supply chain, with each “hop” leveraging a different, often cloud-based, AI solution or service. This AI usage will span solutions and services from a wide range of IT suppliers. It will not have any human interaction between the intermediate “hops,” with humans providing the initial input and then receiving the multi-hop output.
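The structure described here can be sketched in a few lines. The example below is an illustrative assumption, not a PAC reference design: the three hop functions are trivial local stand-ins for what would be separate AI services from different suppliers, and the `Hop`, `HopRecord`, and `run_multi_hop` names are hypothetical. It shows the output of each hop feeding the next with no human in between, and it records an audit trail per hop, which is one place where MLSecOps-style controls could attach.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Hop:
    """One step in the chain; `run` stands in for a call to an AI service."""
    name: str
    supplier: str
    run: Callable[[str], str]


@dataclass
class HopRecord:
    """Audit entry capturing what went into and came out of a hop."""
    hop_name: str
    supplier: str
    input_text: str
    output_text: str


def run_multi_hop(hops: List[Hop], human_input: str) -> Tuple[str, List[HopRecord]]:
    """Pass the human's input through every hop in order, unattended."""
    trail: List[HopRecord] = []
    payload = human_input
    for hop in hops:
        result = hop.run(payload)
        trail.append(HopRecord(hop.name, hop.supplier, payload, result))
        payload = result  # the next hop only ever sees AI-produced output
    return payload, trail


# Trivial stand-ins for real AI services (assumptions for illustration only).
def summarize(text: str) -> str:
    return text[:40]


def translate(text: str) -> str:
    return f"[translated] {text}"


def classify(text: str) -> str:
    return "urgent" if "outage" in text.lower() else "routine"


if __name__ == "__main__":
    pipeline = [
        Hop("summarize", "supplier-a", summarize),
        Hop("translate", "supplier-b", translate),
        Hop("classify", "supplier-c", classify),
    ]
    output, audit_trail = run_multi_hop(pipeline, "Payment outage reported")
    print(output)
    for record in audit_trail:
        print(record.hop_name, record.supplier, "->", record.output_text)
```

Note that after the first hop, every input is machine-generated, so a flaw or manipulation introduced at one hop propagates silently unless something inspects the intermediate records.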

PAC considers this a revolutionary step in using AI that will further accelerate its value for organizations. However, as with every technology innovation, it will expose organizations to a range of previously unknown cybersecurity threats and/or the evolution of existing attack vectors applied to multi-hop AI interactions.

This is where PAC predicts that MLSecOps frameworks will evolve further to become critical to securing both bespoke and off-the-shelf AI solutions and services as they evolve into a highly complicated mesh of multi-hop AI operating models. Data is the most valuable commodity for bad actors to acquire from a cybersecurity perspective, and because it is at the heart of all things AI, this is an area that CxOs must address over the coming years.

The cybersecurity mesh will see a breakthrough in 2024

Today, companies use 40 to 50 different security solutions from third-party vendors. Data exchange between these solutions is usually difficult to establish. That is why many companies seek to significantly simplify data integration while reducing the number of software providers without becoming too dependent on individual suppliers. One possible solution is security mesh architectures.

A security mesh is a composable and scalable approach to extending security controls, even to widely distributed assets. Its flexibility is well suited for increasingly modular models that align with hybrid multi-cloud architectures.

It enables a more composable, flexible, and resilient security ecosystem. Rather than having each security tool run in a silo, a cybersecurity mesh allows tools to interoperate through several supportive layers, such as consolidated policy management, security intelligence, and identity fabric. In other words, the traditional siloed approach will be broken up, different security solutions will share information, and management will be centralized.

Security meshes are not a completely new security solution. Rather, they are an architectural overlay to existing security solutions, designed to:

- create a more dynamic environment for security across the network by allowing individual security services to communicate and integrate;

- provide a more scalable and flexible security response, making an organization’s security posture more agile;

- improve the defense posture by facilitating collaboration between analytical and integrated security solutions;

- create an environment where cybersecurity technology can be easily deployed and maintained.

Many organizations have already adopted Zero Trust Network Access over the past two years, cybersecurity talent is still scarce, and there is a need to consolidate cybersecurity solutions. For these reasons, cybersecurity mesh will be a pull topic in 2024 from a security software vendor perspective as well as from a security services provider perspective, as those architectures need to be implemented and integrated into an operating model.

Addressing AI inbreeding through the application of responsible and explainable AI

Whilst 2023 is the year in which AI awareness accelerated across the general public globally, the use of the technology has been growing year on year for the past decade. As AI has evolved, a critical issue with the technology has also increased in prominence.

Large volumes of data are core to the successful use of AI across a range of organizational process scenarios. The rapid growth in awareness of generative AI (GenAI), which is based on large language models (LLMs), has spotlighted the concept of AI inbreeding this year.

For mammals, inbreeding refers to the genetic corruption that results from members of a population reproducing with others who are too genetically similar. This amplifies the expression of recessive genes in the form of significant health problems and other deformities.

Over time, from indicators PAC has seen, a digital form of inbreeding has a high likelihood of occurring and impacting the long-term effectiveness of AI solutions and services. The focus on GenAI in 2023 is a prime example of AI’s evolutionary challenge.

While we are, at the time of publication, up to a fourth version of the ChatGPT model, from a PAC perspective this would still be considered a first-generation technology in its field because it has been trained on a relatively clean range of predominantly human-generated data points that reflect human sentiment and sensibilities.

However, as single and multi-hop AI workflows generate more and more data, society will very rapidly be in a position where AIs are predominantly consuming content produced by other AIs, content that may drift over many processing iterations away from relevance to human societal perspectives. Essentially, sophisticated AIs will be processing data that emulates human culture rather than data that comes directly from it.

This behavior would be the AI equivalent of inbreeding, providing results not based on reality but a distorted perspective. One researcher on X/Twitter referred to this phenomenon amusingly as “Hapsburg AI.” All jokes aside, PAC considers this a very serious warning sign for a potential future that could have severe effects on the benefit of AI both for organizations and society.

This is why PAC predicts that the role of responsible AI (RAI) as a framework and explainable AI (XAI) as a technology will become critical to managing and addressing the potential of AI inbreeding in all subsequent generations from 2024 onwards.

AI capabilities will massively impact the future cloud vendor landscape

AI use cases like generative AI (GenAI) require large amounts of computing power, which is only affordable at scale, typically delivered through a cloud model. Today’s consumer-centric GenAI solutions are therefore dominated by public cloud-based solutions trained with data publicly available on the Internet.

However, there will be a clear distinction between private and enterprise use cases in the AI market. AI models deployed in a professional context not only have to meet the requirement of high quality of the underlying data; they also have to ensure that AI usage does not compromise the security and privacy of an organization’s potentially sensitive data.

At the same time, cloud consumption transparency and optimization are key for organizations as AI can potentially lead to uncontrolled and excessive cloud spending, similar to what we saw during the first wave of cloud migrations.

PAC therefore expects future AI services to be based on a multitude of public, private, and hybrid cloud models. The deployment model of choice will always be a trade-off between quality, sovereignty, costs, and, increasingly, sustainability. This will lead to a variety of offerings on the vendor side.

The hyperscale cloud providers today support the lion’s share of the AI market. They are experienced in using AI technology at scale in their core activities – e-commerce, search, video streaming, etc. Also, they aim to bind customers even more strongly to their platform and are hoping for massive increases in cloud consumption.

Most of the major SaaS platform vendors are enriching their software solutions with AI services to drive differentiation and customer loyalty.

Providers of hosted private cloud services are in the starting blocks to address particularly sensitive workloads with dedicated or even on-premises services. Local or regional hosting providers are addressing sovereignty and compliance issues. Technology vendors are addressing the increasing demand for on-site cloud infrastructures for privacy and latency reasons. Managed services providers are prepared to manage on-site and hybrid cloud landscapes.

Finally, consulting and systems integration providers are investing heavily in AI-related capabilities to be able to support clients with their AI journey, from use case-related consulting to selecting a suitable cloud model.

All this means that dedicated AI capabilities and offerings will impact not only the future success of large parts of the cloud vendor landscape but also the vendors’ delivery models in many areas.

The integral role of GenAI as the last piece of the DCE personal shopper jigsaw

The nascent potential of generative AI (GenAI) is of great value to organizations because use cases for it can occur across a multitude of business functions. To that end, digital customer engagement (DCE) within organizations is fertile ground for its potential use.

PAC considers that one of its key use cases will be in the form of an assistant, or as some companies call it, a co-pilot, as a part of digital experiences delivered directly to consumers or through devices used by employees to support in-person customer engagements.

For at least the past decade, organizations operating business-to-consumer (B2C) channels, and more recently direct-to-consumer (D2C) channels, have aspired to provide digital experiences akin to a personal shopper. This is the holy grail: personalization so precise that the industry labeled it hyper-personalization.

However, before GenAI, the aspiration to achieve this far exceeded the available capability. This type of personalization aims to develop a stickier and more long-term relationship with customers that increases engagement and loyalty as well as cross-sell and up-sell opportunities.

In this context, PAC considers the traditional role of a personal shopper to be a person who has analyzed their client to the extent that they can purchase a range of goods for them, knowing it is to their client’s taste. Understandably, due to the scale of costs, this type of service has historically been aimed at the luxury goods market for individuals of a certain level of wealth.

However, the ubiquitous growth of smartphones and 4G to 5G data connectivity, combined with the exponential rise in online digital experiences, led many organizations to aspire to offer a digital equivalent of a human personal shopper to consumers across all spending tiers.

Prior to the recent broad societal awareness of GenAI, PAC has always considered a piece of the jigsaw puzzle regarding the digital personal shopper concept to be missing. Organizations that sell directly to consumers typically have tight margins, so it has taken many years of investment to unify the data they have on their customers from across internal channels, and source anonymized data from external parties to reinforce further the insight they have on purchasing behavioral patterns.

Additionally, before GenAI, chatbot-style interfaces were, in PAC’s opinion, exceedingly basic and not sophisticated enough to provide purchasing advice or guidance akin to that of a traditional personal shopper.

Buzzword phrases like hyper-personalized have been bandied about for years but were hampered by digital experiences not being able to engage at scale without significant back-office employee and system processing. However, PAC predicts that from 2024 onwards, GenAI will be incorporated into digital experiences on employee devices to support in-person engagements, and into similar experiences delivered directly to customers’ devices.

In doing so, GenAI will democratize access to personal shopper-style digital assistant services for any consumer, simplifying the purchasing cycle of organizations and driving new revenue opportunities.

The noose is tightening around European companies and their sustainability strategies

Although there have been debates about the impact that humans have on the environment and the climate for over half a century, the past three years have seen the most substantial change as a result of these debates. The European Union has launched initiatives like the Green Deal to ensure that the continent will be climate-neutral by 2050. Among them, the Corporate Sustainability Reporting Directive (CSRD) is undoubtedly one of the most defining.

The CSRD requires that companies with business activities in Europe comply with the new European Sustainability Reporting Standards (ESRS) (adopted by the Commission in July 2023). It also strengthens regulations that cover reporting practices related to social and environmental information.

The policy follows the principle of double materiality, which obliges organizations to disclose how they are affected by environmental and social issues and what impact their operations have on people and the environment.

The directive took effect in 2023 and will be applied starting January 1st, 2024, i.e., the new rules have to be applied to sustainability reports for the financial year 2024. This also means that the stakes of being compliant are rising.

Before, companies that had to publish non-financial reports had a certain degree of freedom in content creation, allowing them to demonstrate their ambitions and commitment to ESG in their own way. From 2024 onwards, however, non-financial reporting will be standardized and more accessible to relevant stakeholders such as clients, customers, and regulators, i.e., companies’ sustainability efforts will be more transparent and comparable.

This has several implications. For one, companies are now hurrying to review their compliance systems and set up the right tools and processes to gather the information that has to be included in sustainability reports. They want to avoid any form of punishment that may come with CSRD non-compliance as it would involve hefty fines and may even lead to prison sentences.

Furthermore, increased transparency will also heighten the pressure on companies from 2024/2025. Customers will be able to make more informed purchase decisions based on sustainability considerations. Business risks associated with ESG will also play an even bigger role in financial institutions’ investment decisions.

NGOs and activist groups will find it easier to scrutinize business activities and, if necessary, file complaints and get the attention of the press. All this implies that businesses could lose significant market value if they are unable to comply with the CSRD.

At PAC, we consider the coming into force of the CSRD as an accelerator of the shift towards more sustainability in business. In the past, companies have mainly been interested in sustainability because their customers have asked for it and it has provided an opportunity to drive revenues. Now the topic has turned into an obligation that can cause legal, reputational, and commercial damage.

To avoid that, we believe companies will look for partners that will help them implement the right software solutions and processes to efficiently track, gather, and analyze data related to ESG, and facilitate the creation of sustainability reports according to the ESRS standard.

At the same time, the comparability of the reports will push businesses to showcase that they are at least as sustainably driven as their direct competitors. Therefore, we suspect that the increasing importance of ESG as a selling point will also lead to a rise in sustainability-related projects, affecting the sustainability of and through IT. All in all, ESG investments will increase sharply to ensure compliance and improve customer attraction and retention.

Visual AI is conquering the factory – digital quality control is a killer application in industrial IoT

Predictive maintenance has been hyped as a key use case in the industrial IoT context for many years, while AI-based visual inspection used to be more of an afterthought. Customers confirm that generating value from predictive maintenance use cases is often difficult; generating value from AI-based visual inspection is much easier and provides genuine benefits.

It is certainly true that cameras have been deployed in factories for over a decade to check production quality. However, a typical challenge in this space is the pseudo-scrap rate, i.e., the frequency with which products are falsely identified as defective. AI can help avoid this.

AI-based visual inspection uses high-definition cameras to capture visual data, which is then processed and analyzed by AI algorithms. These algorithms can identify patterns, anomalies, or specific features that might be difficult for humans to detect, thereby avoiding pseudo-scrap.
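As a rough illustration of the metric, the sketch below computes a pseudo-scrap rate from inspection results. It assumes the rate is measured as falsely rejected parts divided by all inspected parts; the text does not fix a denominator, and some plants may instead divide by the number of good parts. The function name and the sample data are hypothetical.

```python
from typing import Sequence


def pseudo_scrap_rate(
    flagged_defective: Sequence[bool],
    actually_defective: Sequence[bool],
) -> float:
    """Share of inspected parts that were rejected although they were good.

    Assumption: the denominator is the total number of inspected parts.
    """
    if len(flagged_defective) != len(actually_defective):
        raise ValueError("Both sequences must describe the same parts.")
    if not flagged_defective:
        raise ValueError("At least one inspected part is required.")

    false_rejects = sum(
        1
        for flagged, defective in zip(flagged_defective, actually_defective)
        if flagged and not defective
    )
    return false_rejects / len(flagged_defective)


if __name__ == "__main__":
    # Hypothetical results for five parts: what the inspection system
    # flagged versus what a manual re-check found.
    flagged = [True, True, False, False, True]
    truth = [True, False, False, False, False]
    print(f"Pseudo-scrap rate: {pseudo_scrap_rate(flagged, truth):.0%}")
```

Comparing this figure before and after introducing an AI-based inspection model, on the same manually verified sample, would be one simple way to quantify the benefit described above.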

We observe solid proof points in the market that illustrate the value created, the level of maturity reached, and further willingness among manufacturers to invest in this technology for production purposes. Based on AI-powered optical inspection, Advanced Semiconductor Engineering in Taiwan reduced scrap costs by 67%, Agilent Technologies in Singapore improved labor productivity by 31%, and Haier in China increased inspection efficiency by 50%.

Thanks to advancements in AI-based vision systems and the clear value that digital quality control systems provide to manufacturing operations, manufacturers around the world have invested heavily in this use case over the past few years and have already reached a very advanced level of maturity.

42% of manufacturing companies confirmed in a survey that they had already reached a high level of maturity for this use case. In addition, 44% of manufacturing companies around the world said in our survey that they were planning further substantial investments in this space. All this illustrates that digital quality control is a real “killer application” in industrial IoT.

Sustainability is becoming a key factor in opting for cloud solutions

The sustainability of IT is a key aspect of infrastructure considerations and, lately, of software development initiatives, too. Many organizations have to, or will soon have to, comply with increasingly strict ESG reporting requirements (in the EU and elsewhere).

Running IT internally leads to scope 2 emissions linked to electricity consumption. To reduce this type of emissions, organizations can either modernize their existing data centers or build new ones, choose infrastructure hosting (which involves scope 3 emissions), or migrate the infrastructure to the cloud (which also falls under scope 3).

Most organizations in unregulated markets pursue a cloud-first strategy that is fueled by sustainability and reporting needs. In addition to the possibility of migrating individual workloads to the cloud with reasonable effort and operating costs, organizations will increasingly ask how sustainable different cloud offerings are. When selecting a cloud provider, sustainability will be a key factor, alongside the availability of service provider resources and adequate features in the PaaS environment.

Given the varying degrees of sustainability efforts made by the hyperscalers and by smaller cloud providers, we at PAC expect that starting in 2024, there will be massive efforts that will radically change past goals and timelines, not least because the cloud providers also have to meet certain reporting requirements.

In addition, we expect cloud providers to provide efficiency parameters for prospects to show how sustainable their offerings are. Power Usage Effectiveness (PUE), Green Energy Coefficient (GEC), Temperature Per Cabinet, and Water Usage Effectiveness (WUE) are the minimum indicators a cloud provider should provide per data center/region. Cloud providers that do not even offer this basic information will be immediately ruled out by most organizations unless they provide a unique service that is needed and not available elsewhere.
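For readers less familiar with these indicators, the following sketch computes three of them using their commonly cited definitions, which are not spelled out in this article and are therefore stated here as assumptions: PUE as total facility energy divided by IT equipment energy, GEC as green energy divided by total facility energy, and WUE as liters of water used per kWh of IT equipment energy. The `DataCenterReport` structure and the sample figures are hypothetical. Temperature Per Cabinet is a directly measured value and is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenterReport:
    """Hypothetical annual figures a provider might publish per data center."""
    total_facility_energy_kwh: float
    it_equipment_energy_kwh: float
    green_energy_kwh: float
    water_usage_liters: float


def pue(report: DataCenterReport) -> float:
    """Power Usage Effectiveness: 1.0 is the theoretical ideal, lower is better."""
    return report.total_facility_energy_kwh / report.it_equipment_energy_kwh


def gec(report: DataCenterReport) -> float:
    """Green Energy Coefficient: share of total energy from green sources."""
    return report.green_energy_kwh / report.total_facility_energy_kwh


def wue(report: DataCenterReport) -> float:
    """Water Usage Effectiveness in liters per kWh of IT energy, lower is better."""
    return report.water_usage_liters / report.it_equipment_energy_kwh


if __name__ == "__main__":
    # Sample figures invented for illustration.
    sample = DataCenterReport(
        total_facility_energy_kwh=1_500_000,
        it_equipment_energy_kwh=1_000_000,
        green_energy_kwh=750_000,
        water_usage_liters=1_800_000,
    )
    print(f"PUE: {pue(sample):.2f}")
    print(f"GEC: {gec(sample):.2f}")
    print(f"WUE: {wue(sample):.2f} L/kWh")
```

Because all three ratios derive from a handful of annual totals, a provider that publishes those totals per data center or region gives prospects enough to compare offerings on a like-for-like basis.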